Completely ignoring warning signals: how is that possible? On the 10th of April 2010, the Polish presidential airplane crashed. The president and his delegation were all killed on impact. It happened less than a kilometre from the airport.

National Geographic devoted an entire episode of Air Crash Investigation to the event. The investigators came across a very intriguing fact. During the descent, a cockpit alarm warning that the airplane was losing too much height was howling away. Oddly enough, no one really responded to it. Even more disturbing: the only response from the crew was to switch the alarm off manually.

Allow me to explain why they did that. Flying an airplane too low when there is no airport nearby is enough to set the alarm blaring. The Polish presidential airplane was a passenger airplane, but it often landed at military airports. As these military airports are not included in the database of passenger airports, the system interpreted the situation as if there were no airport. And so, any time the airplane landed at a military airport, the alarm would sound. Because the pilots often landed at these airports, it's understandable that they weren't particularly shocked by the howling siren. Of course, it was still an incredibly bothersome sound. This explains why they would reset the altimeter alarm, which is exactly what happened on this flight.

Ignoring alarms. It happens a lot, especially in the corporate world. An alarm signal on a dashboard is almost always met with a response such as, ‘Yes, but that’s just temporary, because …’ or ‘This doesn’t take … into account, so …’ What are the consequences?

It’s not just the value of the alarm signal that is nullified; the details of the other measurements or assessments also lose a great deal of their credibility.

We expect our measurements and assessments to reflect reality. But is this really the case? Whenever we assess or measure something, we run the risk of making an error. And then we need to decide how to read the result: does it signal a problem or not? To make this decision, we often set an arbitrary cut-off score. This score determines whether the measurement or assessment is positive or negative, and whether the dashboard display is red or green.

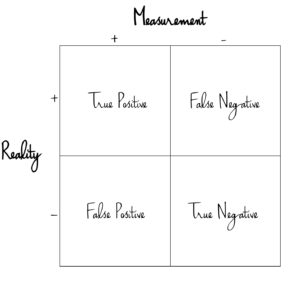

This cut-off score, in combination with our error, leaves us with four possible outcomes. And to explain the situation, we are using cancer screenings as an example:

- Our measurement was correct and we have a positive score (true positive) e.g. we checked for cancer and the patient has cancer.

- Our measurement was correct and we have a negative result (true negative) e.g. we found no sign of cancer and the patient does not have cancer.

- Our measurement was incorrect and we have a positive score (false positive) e.g. we found cancer, but the patient doesn’t actually have cancer.

- Our measurement was incorrect and we have a negative score (false negative) e.g. we found no sign of cancer, but the patient does actually have cancer.
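To make the four outcomes concrete, here is a minimal Python sketch that sorts a handful of measurements into them. The scores, the diagnoses and the cut-off of 0.5 are made-up illustration values, and the helper names (`classify`, `outcome`, `tally`) are hypothetical. We also assume that a score at or above the cut-off counts as a positive result.

```python
from collections import Counter


def classify(score: float, cutoff: float) -> bool:
    """Positive ('red') when the score reaches the cut-off (assumed convention)."""
    return score >= cutoff


def outcome(predicted: bool, actual: bool) -> str:
    """Name which of the four outcomes a single measurement falls into."""
    if predicted and actual:
        return "true positive"
    if not predicted and not actual:
        return "true negative"
    if predicted and not actual:
        return "false positive"  # we found cancer, but there is none
    return "false negative"      # we found nothing, but the patient is sick


def tally(scores, actual, cutoff):
    """Count how often each outcome occurs for a given cut-off."""
    return Counter(outcome(classify(s, cutoff), a) for s, a in zip(scores, actual))


# Hypothetical screening scores and whether each patient really has cancer.
scores = [0.9, 0.7, 0.4, 0.2, 0.6, 0.1]
actual = [True, False, True, False, True, False]

counts = tally(scores, actual, cutoff=0.5)
# 2 true positives, 2 true negatives, 1 false positive, 1 false negative
print(dict(counts))
```

Note that the same data can produce a different mix of outcomes as soon as the cut-off moves; the measurement itself stays the same, only our interpretation changes.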

Where do we set our cut-off scores? We decide this ourselves. Where we set them determines the ratio of false positive to false negative outcomes. In our example, we would clearly prefer a false positive result to a false negative one. It’s better to mistakenly believe that someone has cancer and have them examined more carefully than to send them home when they are actually sick. To avoid the latter, we set a more sensitive cut-off score, one that flags a positive result sooner. And if we set this threshold too sensitively? We end up with constant false positive results, and our measurements are then worthless.
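The trade-off can be illustrated by trying a few cut-offs on the same data. This is a sketch under stated assumptions: the scores and diagnoses are invented, a higher score is assumed to mean the patient is more likely to be sick, a score at or above the cut-off counts as positive, and `count_errors` is a hypothetical helper.

```python
def count_errors(scores, actual, cutoff):
    """Return (false positives, false negatives) for one cut-off.

    Assumes a score at or above the cut-off is a positive result.
    """
    fp = sum(1 for s, a in zip(scores, actual) if s >= cutoff and not a)
    fn = sum(1 for s, a in zip(scores, actual) if s < cutoff and a)
    return fp, fn


# Hypothetical screening scores and the true diagnosis for each patient.
scores = [0.9, 0.7, 0.4, 0.2, 0.6, 0.1, 0.35, 0.55]
actual = [True, False, True, False, True, False, False, False]

for cutoff in (0.3, 0.5, 0.8):
    fp, fn = count_errors(scores, actual, cutoff)
    print(f"cut-off {cutoff}: {fp} false positives, {fn} false negatives")
```

With these numbers, a cut-off of 0.3 misses no sick patients but raises three false alarms, while a cut-off of 0.8 raises no false alarms but misses two sick patients. Moving the threshold does not remove errors; it only shifts them from one kind to the other.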

In the example of the Polish airplane, the number of false positives was too high. The alarm lost its effect.
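One way to see why too many false positives drain an alarm of its meaning is to compute the share of alarms that point to a real problem: true positives divided by all alarms raised, often called precision or positive predictive value. The sketch below is illustrative only; the counts are invented and are not data from the flight, and `alarm_precision` is a hypothetical helper.

```python
def alarm_precision(true_alarms: int, false_alarms: int) -> float:
    """Share of alarms that signal a real problem: TP / (TP + FP)."""
    total = true_alarms + false_alarms
    if total == 0:
        raise ValueError("no alarms were raised")
    return true_alarms / total


# Hypothetical: 1 genuine alarm among 50 alarms in total.
print(f"{alarm_precision(1, 49):.0%} of alarms were genuine")  # prints "2% of alarms were genuine"
```

When only one alarm in fifty is genuine, ignoring the siren is the habit that pays off almost every time, which is exactly what makes the one real warning so easy to miss.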

In these examples, human lives are at stake. Fortunately, this is not the case in the corporate world. But under no circumstances should the dashboard be turned into a meaningless colour picture.